Slow transfer speed due to SSD cache acceleration

- galosu82

- Starting out

- Posts: 34

- Joined: Thu Aug 06, 2020 3:12 pm

Re: Slow transfer speed due to SSD cache acceleration

Guys, I'm not fighting. If you know of a way to test the SSD cache speed while connected to the NAS via a 1 GbE connection, please feel free to let me know. It's certainly a limitation of mine, but I don't know of one.

- Furyous

- Starting out

- Posts: 22

- Joined: Sat Mar 16, 2019 2:48 am

Re: Slow transfer speed due to SSD cache acceleration

I showed that in a prior post, and even included a graph: if the cache is turned on, the transfer over a one-gig connection keeps dipping down to almost nothing. Turning off the cache allows full use of the connection without slowdowns.

- galosu82

- Starting out

- Posts: 34

- Joined: Thu Aug 06, 2020 3:12 pm

Re: Slow transfer speed due to SSD cache acceleration

The Windows file copy dialog isn't a reliable tool for testing network or drive performance. There are specific tools for that: CrystalDiskMark, AJA System Test, Blackmagic Disk Speed Test (part of Blackmagic Desktop Video software).

- pokrakam

- Know my way around

- Posts: 101

- Joined: Wed Sep 17, 2008 5:27 am

Re: Slow transfer speed due to SSD cache acceleration

As I said, lots of small accesses will drastically reduce performance compared to a single large copy due to the administration and seek overhead. So that's one scenario. Shifting things around on the NAS itself is another scenario. If I copied stuff from one drive to another it would slow down to about a quarter of the speed with the cache switched on. Granted that's not using the network, but still...

Try running a Windows VM from a network share. The effect is very noticeable over a 1 GbE connection. While it still wasn't brilliant, caching was a dramatic improvement over uncached (from memory I think it took over 15 minutes just to boot up uncached). Here many small disk accesses play a role. The improvement lasts until we hit the 'cache wall', when everything goes to pot and you're way worse off.

- sohouser1

- New here

- Posts: 6

- Joined: Wed Jan 16, 2019 1:12 am

Re: Slow transfer speed due to SSD cache acceleration

galosu82 wrote: ↑Wed Feb 09, 2022 2:16 pm You are missing my point: SSD cache gives no benefits in read/write operations happening from a single PC connected via Gigabit Ethernet. That’s a (pretty obvious) fact, no matter if the feature itself is working or not. Actually, I’m wondering how you can test whether that feature is working if you are unable to read/write from the NAS at speeds higher than 120 MB/sec. I’m not explaining to you what you should worry or not worry about. I’m just describing how a 1 GbE network is not a good scenario to take advantage of SSD cache (unless you have more than 4 workstations constantly reading/writing to/from the NAS).

Umm... Hate to disagree here, but it ABSOLUTELY should give benefits for a single PC at 1g. I don't care much about transfer rate, I care greatly about latency. My 5400 rpm drives have a latency of around 10 ms or higher in practice, and it gets higher with load. Even a crappy SSD has sub-100-microsecond latency, and it doesn't vary with load other than queuing. I run quite a few VMs via NFS to my QNAP, and although they don't generally transfer much data, they are highly latency sensitive. (Tons of very small reads/writes, often strictly sequential in nature.)

Anyway, I've tried Qtier previously and it was a disaster once the SSDs were full (worked great until that point). I'm going to pull out some of the 2.5" drives in my 963 and try Qtier again now that it MIGHT be fixed.
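To put rough numbers on the latency argument above, here is a minimal back-of-the-envelope sketch. It assumes 4 KiB requests issued strictly one at a time (queue depth 1) and ignores network round-trip and protocol overhead; the function name `serial_throughput` is made up for illustration. The two latency figures are the ones quoted in the post, not measurements.

```shell
#!/bin/sh
# serial_throughput LATENCY_US BLOCK_KIB
# Prints the IOPS and MB/s that strictly serial I/O can reach when every
# request has to wait LATENCY_US microseconds before the next one starts.
# (Hypothetical helper; assumes queue depth 1 and no network overhead.)
serial_throughput() {
    awk -v lat_us="$1" -v kib="$2" 'BEGIN {
        iops = 1000000 / lat_us
        mbps = iops * kib * 1024 / 1000000
        printf "%.0f IOPS, %.2f MB/s\n", iops, mbps
    }'
}

serial_throughput 10000 4   # ~10 ms per request (HDD figure from the post)
serial_throughput 100 4     # ~100 us per request (SSD figure from the post)
```

Under these assumptions the two cases come out at about 0.41 MB/s and 40.96 MB/s. Both sit well below the roughly 120 MB/s ceiling of 1 GbE mentioned in the quote, so for this kind of workload the link is not the limiting factor and the difference in device latency shows up directly.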

- dolbyman

- Guru

- Posts: 35251

- Joined: Sat Feb 12, 2011 2:11 am

- Location: Vancouver BC , Canada

Re: Slow transfer speed due to SSD cache acceleration

If it's latency for random (vs. sequential) operation you are after .. 10GbE should still be of interest

https://www.researchgate.net/figure/Mea ... _266489212


- galosu82

- Starting out

- Posts: 34

- Joined: Thu Aug 06, 2020 3:12 pm

Re: Slow transfer speed due to SSD cache acceleration

sohouser1 wrote: ↑Sat Feb 19, 2022 2:27 am

...

Anyway, I've tried qtier previously and it was a disaster once the ssd's were full (worked great until that point) I'm going to pull out some of my 2.5" drives in my 963 and try qtiering again now that it MIGHT be fixed.

The fix is related to SSD cache. I’m not sure about Qtier.

- blusk06

- Getting the hang of things

- Posts: 68

- Joined: Tue Nov 12, 2013 6:56 am

Re: Slow transfer speed due to SSD cache acceleration

I've gone through this whole thread.

It looks like in QTS 5.0.1, they stopped using flashcache. /proc/flashcache/CG0 does not exist. In fact, the only thing in the /proc/flashcache directory is a file called "flashcache_version".

Code: Select all

    [/proc/flashcache] # ls -la
    total 0
    dr-xr-xr-x 3 admin administrators 0 2023-04-12 11:34 ./
    dr-xr-xr-x 600 admin administrators 0 2023-04-12 05:22 ../
    -r--r--r-- 1 admin administrators 0 2023-04-12 11:34 flashcache_version

I tried modifying the vm parameters as recommended by cryptochrome. I had to set them using sysctl -w so they were persistent through a reboot.

Code: Select all

    [/sbin] # cat /proc/sys/vm/dirty_ratio
    50
    [/sbin] # cat /proc/sys/vm/dirty_background_bytes
    1
    [/sbin] # cat /proc/sys/vm/dirty_expire_centisecs
    6000
    [/sbin] # cat /proc/sys/vm/dirty_writeback_centisecs
    100

Still, when my cache is full I get sporadic write and read performance. I have 2x 2TB M.2 NVMe drives in a QM2-2P-384A, RAID1.

When the cache is emptied, my write and read performance max out my line speed, which is 5GbE.

Yes, I do have my SSD cache set up to cache ALL I/O, as I want it to accelerate large write performance.

I am using a TVS-872N with 8x 8TB drives in RAID6 (with one being a spare, 7 active drives).

I don't see any way to adjust the fallow settings now in QTS 5.0.1. There has got to be some way to edit the configuration (at the root shell) of whatever QNAP is using to implement SSD cache, to increase the rate at which it flushes dirty cache items.

There's a command /sbin/qcli_cache, but this doesn't seem to expose anything useful either.

Code: Select all

    [/sbin] # ./qcli_cache --help
    QCLI 5.0.1 20230322, QNAP Systems, Inc.
    -v --version, display the version of QCLI and exit.
    -h --help, print this help.
    -i --ssdcachevolumeinfo, show ssd cache volume information.
    -c --createssdcachevolume, create ssd cache volume.
    -r --removessdcachevolume, remove SSD Cache volume.
    -S --setssdcachevolume, set Enabled/Disabled SSD Cache volume.
    -b --ssdcachebypassthreshold, show ssd cache bypass threshold(KB).
    -B --setssdcachebypassthreshold, set ssd cache bypass threshold(KB).
    -p --ssdcacheportinfo, show ssd cache port information.
    -a --addssdcachedrive, add SSD Cache drive.
    -s --cachesetting, show SSD Cache Enabled/Disabled volume and iSCSI LUN list.
    -e --setssdcachevolume, set Enabled/Disabled SSD Cache for each volume/iSCSI LUN.
    [/sbin] # ./qcli_cache -s
    BLV_ID Type SSDCache Alias
    1 Volume Enabled DataVol1
    2 Volume Enabled public
    3 Volume Disabled surveillance
    4 Volume Enabled timemachine
    5 Volume Enabled software
    6 Volume Enabled mMedia
    8 Volume Enabled manualbackups
    10 Volume Enabled summerhome
    11 Volume Enabled mKidStuff
    12 Volume Enabled mConcerts
    13 Volume Enabled mTVShows
    14 Volume Enabled mMovies
    7 Volume Disabled bryanhome
    9 Volume Disabled DataVol2
    [/sbin] # ./qcli_cache -p
    SSDCache_support SSDCache_port_count SSDCache_maxsize SSDCache_port
    yes 10 16384 GB 1,2,3,4,5,6,7,8,9,10
    [/sbin] # ./qcli_cache -b
    bypass_threshold
    0
    [/sbin] # ./qcli_cache -i
    volumeID poolID RAIDLevel Cache_algorithm cache_type cache_mode cache_flushing Hit_read Hit_write Capability Allocated enable Status PDlist
    15 256 1 LRU Read-Write All I/O -- 48 % 71 % 1.69 TB 97 % Enabled Ready 00170001,00170002

So, I got to thinking, maybe they implemented caching using LVM and dm-cache/lvmcache...

If they did, I can tune it. See the man page for lvmcache: https://man7.org/linux/man-pages/man7/lvmcache.7.html

Looking in /etc/lvm... there is no profiles directory. And the lvm.conf file doesn't have any useful caching parameters.

When I list my LVs and add the CacheSettings column, it's BLANK! vg256/lv256 is my cache volume.

Code: Select all

    [/etc/lvm] # lvs -ao+cachesettings
    WARNING: duplicate PV G37V38tnIJqF83dfyrx0saxWwBnZw6cr is being used from both devices /dev/drbd3 and /dev/md3
    Found duplicate PV G37V38tnIJqF83dfyrx0saxWwBnZw6cr: using existing dev /dev/drbd3
    LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert CacheSettings
    lv1 vg1 Vwi-aot--- 80.00g tp1 100.00
    lv11 vg1 Vwi-aot--- 200.00g tp1 63.59
    lv12 vg1 Vwi-aot--- 2.50t tp1 89.27
    lv13 vg1 Vwi-aot--- 2.50t tp1 77.00
    lv1312 vg1 -wi-ao---- 3.90g
    lv14 vg1 Vwi-aot--- 10.50t tp1 89.08
    lv15 vg1 Vwi-aot--- 8.50t tp1 86.58
    lv2 vg1 Vwi-aot--- 120.00g tp1 72.95
    lv4 vg1 Vwi-aot--- 3.50t tp1 54.90
    lv5 vg1 Vwi-aot--- 2.50t tp1 52.26
    lv544 vg1 -wi------- 147.72g
    lv544 vg1 -wi------- 147.72g
    lv544 vg1 -wi------- 147.72g
    lv6 vg1 Vwi-aot--- 350.00g tp1 92.80
    lv7 vg1 Vwi-aot--- 700.00g tp1 78.74
    lv9 vg1 Vwi-aot--- 900.00g tp1 84.11
    tp1 vg1 twi-aot--- 36.13t 71.91 2.89
    [tp1_tierdata_0] vg1 vwi-aov--- 4.00m
    [tp1_tierdata_1] vg1 vwi-aov--- 4.00m
    [tp1_tierdata_2] vg1 Cwi-aoC--- 36.13t [tp1_tierdata_2_fcorig] 97.72 2.32
    [tp1_tierdata_2_fcorig] vg1 owi-aoC--- 36.13t
    [tp1_tierdata_2_fcorig] vg1 owi-aoC--- 36.13t
    [tp1_tierdata_2_fcorig] vg1 owi-aoC--- 36.13t
    [tp1_tmeta] vg1 ewi-ao---- 64.00g
    lv10 vg2 Vwi-aot--- 100.00g tp2 100.00
    lv1313 vg2 -wi-ao---- 184.00m
    lv546 vg2 -wi------- 17.85g
    lv8 vg2 Vwi-aot--- 900.00g tp2 100.00
    tp2 vg2 twi-aot--- 1.68t 58.15 0.15
    [tp2_tierdata_0] vg2 vwi-aov--- 4.00m
    [tp2_tierdata_1] vg2 vwi-aov--- 4.00m
    [tp2_tierdata_2] vg2 Cwi-aoC--- 1.68t [tp2_tierdata_2_fcorig] 0.00 100.00
    [tp2_tierdata_2_fcorig] vg2 owi-aoC--- 1.68t
    [tp2_tmeta] vg2 ewi-ao---- 64.00g
    lv256 vg256 Cwi-aoC--- 1.69t 97.72 1.04
    [lv256_cdata] vg256 Cwi-ao---- 1.69t
    [lv256_cmeta] vg256 ewi-ao---- 15.00g
    lv545 vg256 -wi------- 17.65g

Finally, I disabled the cache, and instantly ran a "ps -ef | grep -i cache" on the system and these interesting processes popped out at me:

Code: Select all

    /sbin/lvchange vg1/tp1_tierdata_2 --cacheiomode remap-only --cachepolicy invalidator
    /sbin/lvs vg1/tp1_tierdata_2 -a -ocache_policy --noheadings

So now, I need to fill my cache up again and try these 2 things.

One, I will change /etc/lvm/lvm.conf and uncomment this line and see if it makes any difference.

Code: Select all

    # cache_mode = "writethrough"

If that doesn't make any difference, I will use this parameter in /etc/lvm/lvm.conf to set the allocation/cache_mode to "cleaner" per the lvmcache man page linked above.

I will also play around with lvchange and the cachepolicy and cachesettings arguments. Something like this:

Code: Select all

    lvchange --cachepolicy mq --cachesettings 'low_watermark=0 high_watermark=20 writeback_jobs=16 max_age=120000' vg2/tp2_tierdata_2

I am a software engineer who works on storage products for a very big company (bigger and better products than QNAP...). I am very experienced with debugging and customizing Linux.

I feel like there must be ways to tune this more finely than QNAP offers in the GUI.
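For anyone who wants to re-check the writeback values shown earlier in this post, here is a minimal sketch that prints them in one pass. The function name `show_vm_dirty` and the optional directory argument are made up for illustration. One caveat worth keeping in mind: on a stock Linux system `sysctl -w` only changes the running kernel, so it is worth re-running this after a reboot; whether QTS behaves the same way is an assumption that was not verified here.

```shell
#!/bin/sh
# show_vm_dirty [DIR]
# Prints the kernel writeback tunables discussed in this post.
# DIR defaults to /proc/sys/vm; the argument exists only so the helper
# can be pointed at a different directory (hypothetical convenience).
show_vm_dirty() {
    dir=${1:-/proc/sys/vm}
    for name in dirty_ratio dirty_background_ratio dirty_background_bytes \
                dirty_expire_centisecs dirty_writeback_centisecs; do
        if [ -r "$dir/$name" ]; then
            printf '%s = %s\n' "$name" "$(cat "$dir/$name")"
        else
            # Not every kernel exposes every tunable.
            printf '%s = (not available)\n' "$name"
        fi
    done
}

show_vm_dirty
```

Running it before and after a reboot is a simple way to see whether a change actually stuck.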

Last edited by blusk06 on Thu Apr 13, 2023 3:33 am, edited 1 time in total.

QNAP TVS-872N --- 5x 8TB & 3x 10TB RAID6 (7+1 spare)/ 2x 2TB NVMe RAID1 / 2x 2TB NVMe SSD cache RAID1 (QM2) / 16GB Memory / Core i7-9770 / QTS 5.0.1

QNAP TS-870 Pro --- 8x 6TB RAID6 / 16GB memory / Core i7-3770T / QTS 4.6.3

QNAP TS-870 Pro --- 8x 6TB RAID6 / 16GB memory / Core i7-3770T / QTS 4.6.3

- blusk06

- Getting the hang of things

- Posts: 68

- Joined: Tue Nov 12, 2013 6:56 am

Re: Slow transfer speed due to SSD cache acceleration

QNAP is implementing the SSD cache using LVM/dmcache.

The version of LVM that QNAP uses has obsoleted the older "mq" cache policy, which offers all the tunable parameters in the command in my previous post. So we can't use that anymore.

Even if you change cachepolicy to mq, it will still use smq, and it will not take any of those options I put above when using mq cachepolicy.

SMQ is newer and it uses a lot less memory per block.

Refer to:

https://www.kernel.org/doc/Documentatio ... licies.txt

So given that we are using LVM, and we must use SMQ, which is not very tunable, I am left with the following options.

It does allow me to change it from writeback to writethrough with this command:

Code: Select all

    lvchange --cachemode writethrough vg2/tp2_tierdata_2

Verify changes using:

Code: Select all

    lvs -ao+cache_policy,cache_settings,cache_mode

And I'll see how that behaves in terms of performance, hits on spinning disk vs SSD, and cache fill level.

And I'll also remove the SSD cache and re-create it using FIFO instead of LRU, and see if that makes any difference.

Finally, I'll try it in Write Only mode and see what happens.

Other than that, I don't see much else that can be tuned. Frankly, the more I dig into this, the more this seems to be an LVM/dmcache thing rather than a QNAP thing.

Another note: you can watch the syslog messages for some details about what device mapper is doing with the cache. This command will "tail" that log, and you can watch the messages stream while the system is doing some cache operation like removing it.

Code: Select all

    tail -f /mnt/HDA_ROOT/.logs/kmsg

- sanke1

- Starting out

- Posts: 44

- Joined: Sun Nov 13, 2011 8:14 pm

Re: Slow transfer speed due to SSD cache acceleration

Same issue on a QNAP TS-464 on the latest non-beta 5.1 firmware. Once the cache fills up, my NAS is a dead fish, struggling to transfer even at 10 MB/s over a 10G network. Disable the cache and R/W speeds are back up to 800 MB/s / 550 MB/s.

Once data has been dumped on my NAS, it should immediately start writing back all cached data to the HDDs, shouldn't it? But I let it sit for 2 days and the cache was still 99% full.
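A cache that reports 99% full is not necessarily 99% unflushed: with upstream dm-cache, "used" blocks include clean copies of data that is already on the HDDs, and only the "dirty" count still has to be written back. The sketch below parses one status line in the upstream dm-cache format (the layout described in the kernel's device-mapper cache documentation). The sample line is invented, and it is an assumption that QNAP's kernel reports the same fields in the same positions.

```shell
#!/bin/sh
# parse_cache_status: reads dm-cache status lines on stdin and prints the
# share of cache blocks in use and the number that are still dirty.
# Upstream field layout (after "<start> <length> cache"):
#   $4 metadata block size   $5 used/total metadata blocks
#   $6 cache block size      $7 used/total cache blocks
#   $8-$11 read/write hits and misses   $12 demotions   $13 promotions
#   $14 dirty blocks
parse_cache_status() {
    awk '$3 == "cache" {
        split($7, blocks, "/")
        used = blocks[1]; total = blocks[2]; dirty = $14
        printf "used %.1f%% of cache blocks; %d dirty (%.1f%%)\n",
               100 * used / total, dirty, 100 * dirty / total
    }'
}

# Invented sample line for illustration only, not output from a real NAS.
sample='0 1000 cache 8 10/100 128 970/1000 5 5 5 5 0 0 42 1 writeback 2 migration_threshold 2048 smq 0 rw -'
printf '%s\n' "$sample" | parse_cache_status
```

For the sample this prints a cache that is 97.0% used but has only 42 dirty blocks (4.2%). On a live system the input would have to come from something like `dmsetup status` for the cache device, assuming that tool is present on QTS; if the dirty count stays high for days, that would point at writeback not happening rather than at the cache simply being full.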

- blusk06

- Getting the hang of things

- Posts: 68

- Joined: Tue Nov 12, 2013 6:56 am

Re: Slow transfer speed due to SSD cache acceleration

I've gotten to where I disable/enable cache after I do big data dumps just to force it to flush the cache. Annoying. I'm on 5.1 now too.


- zipler

- Know my way around

- Posts: 109

- Joined: Fri Feb 20, 2009 5:15 am

Re: Slow transfer speed due to SSD cache acceleration

I just found this topic after years of dealing with a slow NAS. I have played around with disabling the SSD cache, and my performance issues seem to go away... It's amazing how this could be the source of so much frustration.

I am running a 1273-URP with 64GB RAM, 8x6GB 7200RPM drives and 2 internal M.2 SSDs... When I turn on the cache, it seems to perform great... I get 300MBps transfers in Windows... After a few hours/days, I am getting 20-30MBps.

Without the SSDs I get about 175MBps. It would be great if it would maintain the 300MBps.

It's so bad the web UI of the QNAP feels unresponsive. I have started looking at TrueNAS replacements... but am not ready to purchase another NAS yet while this one is still fairly new. NVMe might be an option, but I don't want to spend more money trying to improve the performance only to find out later it doesn't help...

Again - thank you all for your posts!


- djmcnz

- Starting out

- Posts: 15

- Joined: Sat Apr 29, 2023 10:37 am

Re: Slow transfer speed due to SSD cache acceleration

zipler wrote: ↑Sat Nov 11, 2023 4:37 pm I just found this topic after years of dealing with a slow NAS. I have played around with disabling the SSD cache, and my performance issues seem to go away... Its amazing how this could be the source of so much frustration.

...

Its so bad the Web UI of the QNAP feels unresponsive.

Same here. I can't believe that I've fought with performance problems with my TS-364 for a year now, and seem to have fixed them entirely by simply disabling the SSD cache.

This is a joke and QNAP should be ashamed for not either fixing their poor implementation, or making it very, very clear that it could do more harm than good.

FWIW, SSD cache problems are not fixed as of the date of this post and QTS 5.1.4.2596.

- horst99

- First post

- Posts: 1

- Joined: Sat Mar 13, 2021 11:58 pm

Re: Slow transfer speed due to SSD cache acceleration

I went through these problems myself and found a few things worth taking into consideration.

1st - SSDs need much longer to write than to read. SSDs have a mechanism that marks a sector as invalid/deleted, but the real delete/cleaning process takes time. Therefore you provide a kind of 'overprovisioning' with free space. 'Overprovisioning' is a bad description.

2nd - Overprovisioning in file servers means showing users more total space than is really available. Statistics show that users do not need as much free space as they want to see on their drives. Overprovisioning means fooling them: a few will need that space, and that will not exhaust the total, because most users do not need it.

3rd - Writing to SSDs is fast if the drive is freshly formatted. Deleting used sectors means physically cleaning the cells, and that takes far longer than reading them. Therefore SSDs only mark sectors as deleted without really deleting them. Real deletion takes 3 to 10 times longer than reading from that cell. The controller on the SSD therefore keeps cleaned cells available for fast writes. When the controller finds idle time, it really deletes the cells and brings them into a 'write fast' state.

'Overprovisioning' in the cache means keeping a certain amount of space free, so that the write speed is not slowed down by the slow deletion of sectors. The disk writes to the already deleted space and later physically cleans the 'overprovisioning' space.

There is the 'SSD Profiling Tool' for the cache, with which you can check how slow the disk is at physically deleting sectors. Old SATA SSDs typically need 50% free space to keep up the speed. New NVMe M.2 drives (Samsung SSD 980 PRO 2TB) reach 90% speed with 10-20% of overprovisioning space.

1. Note: Even when you use the read cache only, the cache has to regularly delete overwritten sectors to keep the cache up to date, and this slows the cache down.

2. Note: If you use the write cache, you have to configure a RAID level at least as secure as that of your HDDs, because this cache is not flushed to the disks every few seconds. Instead the cache turns the HDDs into hybrid disks, where sectors in flash memory are used the same way as those on the magnetic platters.
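As a quick illustration of the trade-off described above, this sketch shows how much cache space is left at a few over-provisioning levels. It assumes the percentage is taken off the raw capacity, which is how the figures in this post read but was not verified against QNAP's implementation; `usable_gb` is a made-up helper name and the 1000 GB drive size is only an example.

```shell
#!/bin/sh
# usable_gb RAW_GB OP_PERCENT
# Prints the capacity left for caching after reserving OP_PERCENT of the
# raw capacity as over-provisioning space (assumed definition, see above).
usable_gb() {
    awk -v raw="$1" -v op="$2" 'BEGIN { printf "%.0f\n", raw * (100 - op) / 100 }'
}

for op in 10 20 30 40 50; do
    printf '%s%% over-provisioning: %s GB usable of 1000 GB\n' \
        "$op" "$(usable_gb 1000 "$op")"
done
```

So the 50% figure mentioned for older SATA SSDs would halve the usable cache, while 10-20% on a newer NVMe drive costs comparatively little.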


- davidwyl

- New here

- Posts: 2

- Joined: Fri Jan 26, 2024 10:26 am

Re: Slow transfer speed due to SSD cache acceleration

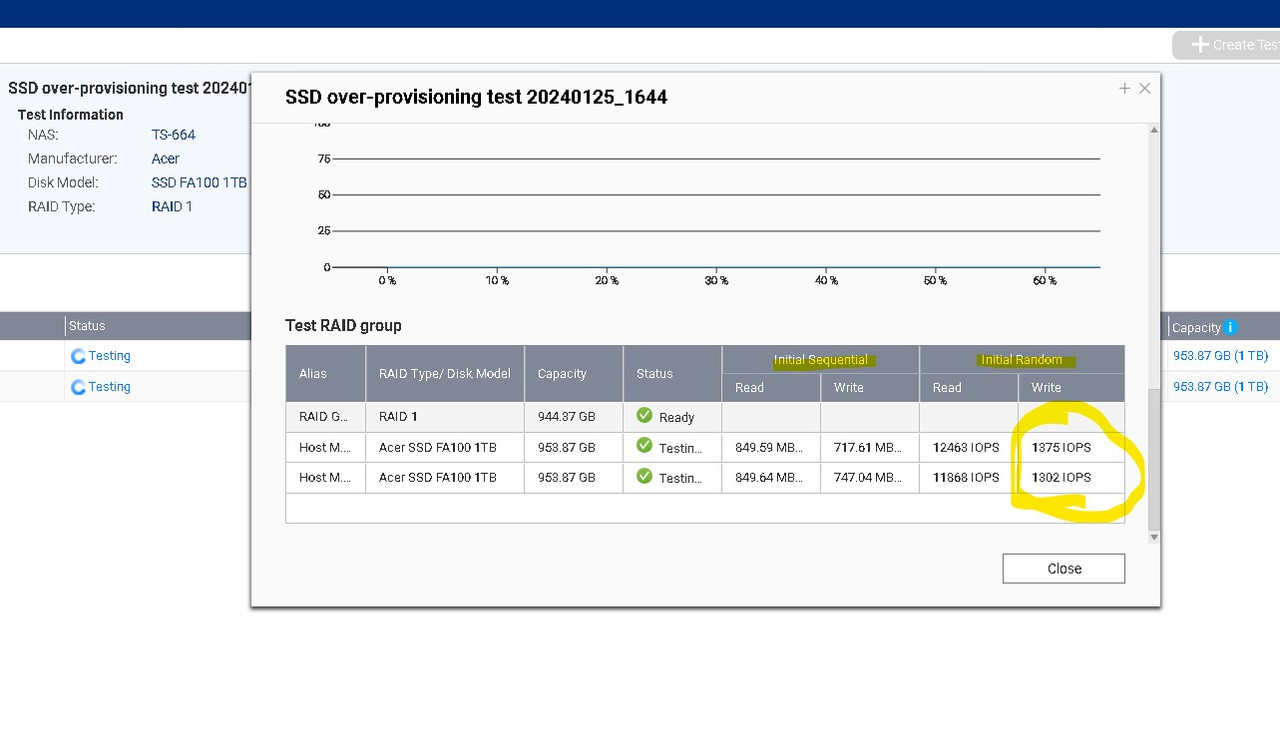

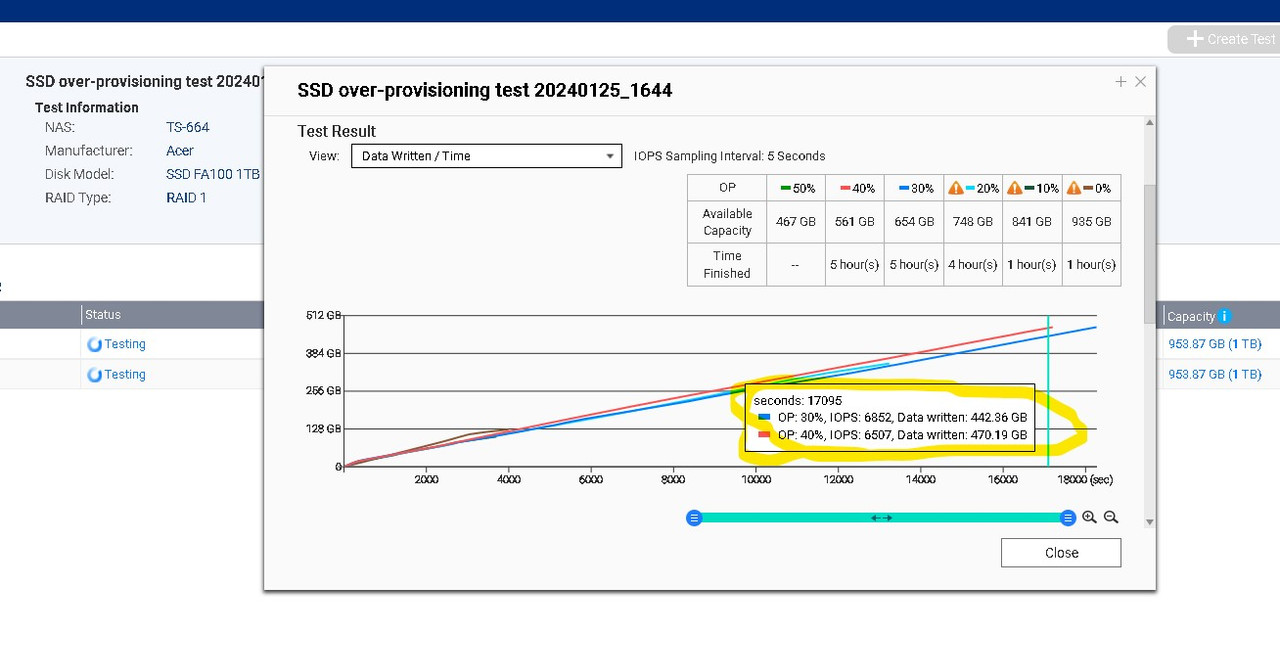

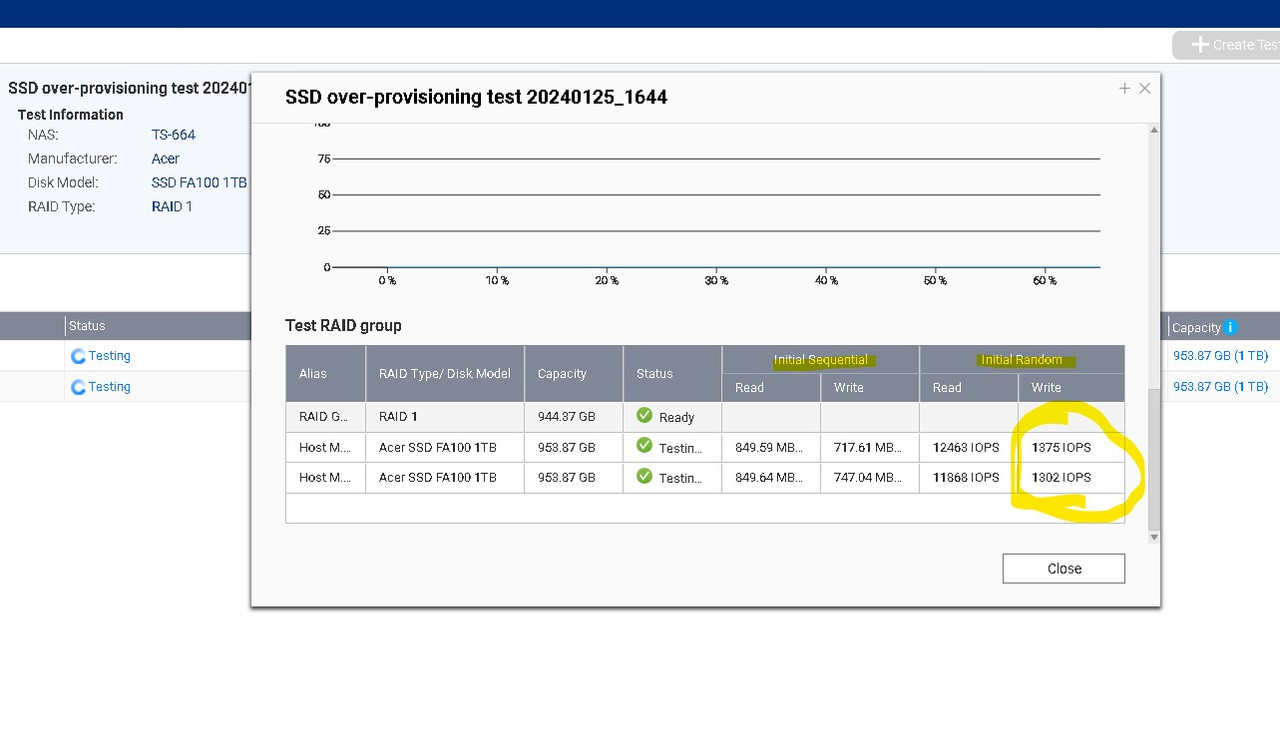

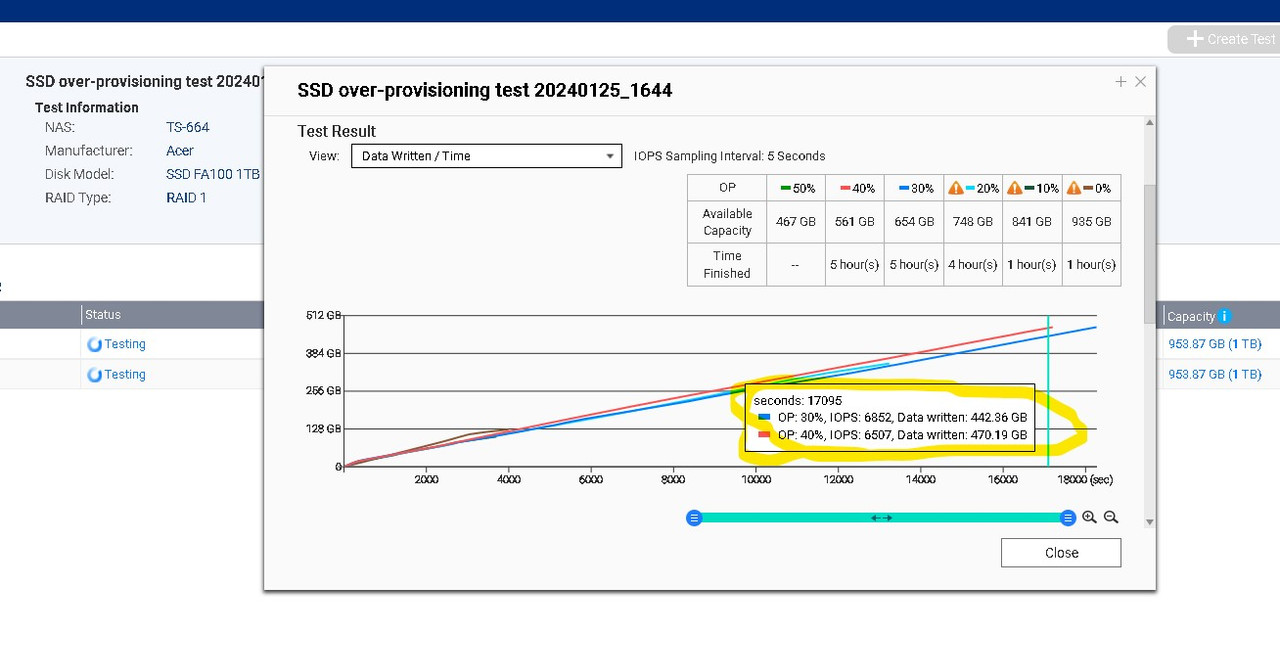

Just set up a TS-664 and bought two Acer FA100 1TB drives as an SSD cache. I ran the SSD Profiling Tool to get an idea of how much over-provisioning to use. From the results I found that the speed is extremely slow.

Initial sequential read/write seems normal, but the initial random write is way too slow. Did I do anything wrong?

At 30% over-provisioning the speed is only 25MBps

At 40% over-provisioning the speed is only 27MBps

Seems like something is not right. Any ideas?
